What is MLOps?

Machine Learning Operations (MLOps) is a new term that’s gaining momentum as companies embrace machine learning and AI. But if you’re not in the industry or familiar with it, here’s what you need to know: MLOps refers to the operations side of machine learning and AI.

MLOps is a set of practices and processes that enable organizations to effectively manage the development, deployment, and maintenance of machine learning models. MLOps is like a cookbook that helps you make sure this entire process is done consistently, efficiently, and effectively.

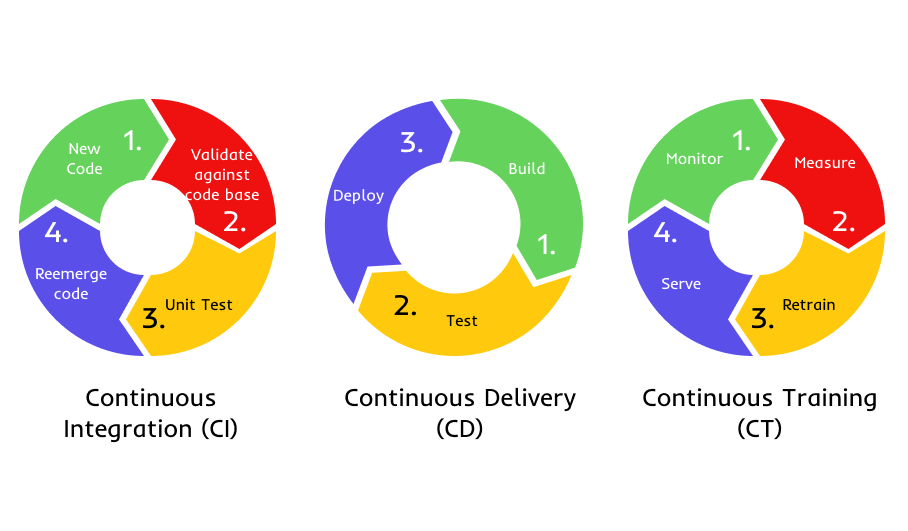

At its core, MLOps is about automating the entire machine learning lifecycle: data collection, data preparation, model experimentation, training and evaluation, deployment, and monitoring. By automating these processes, organizations can reduce the time and effort required to develop and deploy models, while also helping ensure that models are accurate and reliable.

In this article, we’ll discuss how MLOps can help data teams and organizations enhance their machine learning capabilities. We’ll also provide an overview of the key components of MLOps, discuss where to start when implementing MLOps in your organization and what tools to use for each step.

Why do we need it?

MLOps aims to provide scalability, reliability, and security for machine learning and AI initiatives. Here are four reasons why MLOps is considered a crucial aspect of these initiatives:

Scalability

MLOps supports the standardization of environments, optimization of resource allocation, effective management of infrastructure, seamless integration of processes, and implementation of Continuous Integration and Continuous Deployment (CI/CD), making it easier to scale machine learning models as demand increases.

Reproducibility

MLOps ensures consistency and repeatability in the training and deployment of machine learning models. It helps to track the environment and version of each model as well as the data used for training and evaluation. This helps to ensure that a model is reproducible and reliable.
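The Python snippet below is a minimal sketch of this kind of tracking. It records the model version, training parameters, a hash of the training data, and basic environment information for one run. The function names, fields, and sample rows are hypothetical; in practice this is usually delegated to an experiment-tracking or metadata tool.

```python
import hashlib
import json
import platform
import sys


def fingerprint_data(rows: list[dict]) -> str:
    """Return a SHA-256 hash of the dataset's canonical JSON form."""
    # sort_keys makes the hash independent of dict key order within each row.
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def build_run_record(model_name: str, model_version: str,
                     params: dict, train_rows: list[dict]) -> dict:
    """Collect the information needed to reproduce a training run (hypothetical schema)."""
    return {
        "model": model_name,
        "version": model_version,
        "params": params,                          # hyperparameters, including the random seed
        "data_sha256": fingerprint_data(train_rows),
        "python": sys.version.split()[0],          # interpreter version
        "platform": platform.platform(),           # OS / hardware description
    }


rows = [{"text": "great product", "label": 1},
        {"text": "broke after a day", "label": 0}]
record = build_run_record("sentiment-clf", "1.0.0",
                          {"learning_rate": 0.01, "seed": 42}, rows)
print(json.dumps(record, indent=2))
```

If a later run produces a different `data_sha256` for what should be the same dataset, that alone explains why its results cannot be reproduced.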

Fast Time-to-Market

MLOps simplifies and accelerates the end-to-end process of developing and deploying machine learning models. Automated workflows speed up model building, testing, and deployment, which helps teams quickly launch new products and services with improved accuracy and performance.

Monitoring

MLOps provides real-time monitoring of machine learning model performance and alerts if any discrepancies in accuracy or performance are detected. This helps to identify potential issues that could be affecting the model performance and take appropriate corrective actions.
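A minimal sketch of such an alert, assuming that ground-truth labels eventually arrive for live predictions: the hypothetical `AccuracyMonitor` class below keeps a rolling window of outcomes and flags when accuracy falls more than a tolerance below the baseline measured at deployment. The window size and tolerance are illustrative values, not recommendations.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy of live predictions and flag drops below a baseline."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def record(self, prediction, actual) -> None:
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def should_alert(self) -> bool:
        # Stay quiet until the window is full, to avoid alerts from tiny samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.90, tolerance=0.05, window=10)
for prediction, actual in [(1, 1)] * 8 + [(1, 0)] * 2:
    monitor.record(prediction, actual)
print(monitor.rolling_accuracy(), monitor.should_alert())  # 0.8 True
```

In a real system, `should_alert` returning `True` would page someone or open a ticket rather than just print.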

Important Concepts in MLOps

To ensure that the data in your system is accurate and easily accessible, it helps to understand three important concepts.

Data Lineage

Data lineage is the ability to trace a piece of data back to its original source. It lets you track exactly how data moved from source to target and who made each change along the way. The goal is to understand where your data comes from so that you can ensure it is trustworthy and accurate.
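To make the idea concrete, the sketch below models lineage as a small graph in which each dataset points to the datasets it was derived from, and walks upstream to find the original sources. The dataset names and the `trace_to_sources` helper are hypothetical; lineage tools store a richer version of this graph automatically.

```python
# Hypothetical lineage graph: each dataset maps to the datasets it was derived from.
LINEAGE = {
    "training_set_v3": ["cleaned_reviews", "labels_v2"],
    "cleaned_reviews": ["raw_reviews"],
    "labels_v2": ["labeling_export"],
    "raw_reviews": [],
    "labeling_export": [],
}


def trace_to_sources(dataset: str, lineage: dict[str, list[str]]) -> set[str]:
    """Walk upstream from `dataset` and return the original source datasets."""
    sources: set[str] = set()
    seen: set[str] = set()
    stack = [dataset]
    while stack:
        node = stack.pop()
        if node in seen:  # shared ancestors are visited only once
            continue
        seen.add(node)
        parents = lineage.get(node, [])
        if not parents:  # nothing upstream: this is an original source
            sources.add(node)
        stack.extend(parents)
    return sources


print(sorted(trace_to_sources("training_set_v3", LINEAGE)))
# ['labeling_export', 'raw_reviews']
```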

Data Provenance

Data provenance is similar to data lineage, but it focuses more on how data is created and altered. The goal is to understand the history of a piece of information so that you can determine why it changed over time and what happened as a result.
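Where lineage links datasets to each other, provenance can be pictured as an ordered history of changes to one dataset. The sketch below keeps such a history as an append-only log recording what changed, who changed it, and why. The class names, fields, and example events are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ProvenanceEvent:
    operation: str   # e.g. "created", "deduplicated", "relabeled"
    actor: str       # the person or job that made the change
    reason: str      # why the change was made
    row_count: int   # dataset size after the change
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())


@dataclass
class ProvenanceLog:
    dataset: str
    events: list[ProvenanceEvent] = field(default_factory=list)

    def record(self, operation: str, actor: str, reason: str, row_count: int) -> None:
        # Append only: past events are never edited, so the history stays trustworthy.
        self.events.append(ProvenanceEvent(operation, actor, reason, row_count))

    def history(self) -> list[str]:
        """Human-readable history, oldest change first."""
        return [f"{e.operation} by {e.actor}: {e.reason} (rows={e.row_count})"
                for e in self.events]


log = ProvenanceLog("cleaned_reviews")
log.record("created", "ingest_job", "initial export from the reviews database", 10_000)
log.record("deduplicated", "alice", "duplicate reviews inflated the positive class", 9_400)
for line in log.history():
    print(line)
```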

Metadata

Metadata is data about your data. It includes information such as the location, type, and size of a piece of data, as well as who owns it and how it is being used. In addition to helping you understand what data you have, metadata helps you manage your system more effectively.
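As a hedged illustration, the hypothetical `describe_file` helper below builds a metadata record for a single data file, covering the fields mentioned above: location, type, size, owner, and usage. The owner and consumer values are placeholders that a real system would pull from a data catalog.

```python
import os
import tempfile
from datetime import datetime, timezone


def describe_file(path: str, owner: str, used_by: list[str]) -> dict:
    """Build a simple metadata record for a data file."""
    stat = os.stat(path)
    return {
        "location": os.path.abspath(path),
        "type": os.path.splitext(path)[1].lstrip(".") or "unknown",
        "size_bytes": stat.st_size,
        "modified": datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc).isoformat(),
        "owner": owner,        # who is responsible for the data
        "used_by": used_by,    # which models or jobs consume it
    }


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "reviews.csv")
    # newline="" keeps "\n" as-is on every OS, so the byte size is predictable.
    with open(path, "w", encoding="utf-8", newline="") as f:
        f.write("text,label\ngreat product,1\n")
    meta = describe_file(path, owner="data-team", used_by=["sentiment-clf"])
    print(meta)
```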

How does applying these concepts help your system?

- It helps ensure that models are built upon quality data, preserving the accuracy of the results.

- It helps with debugging and reproducing models, as it can be used to identify where issues occurred and how to reproduce results for further analysis.

- Data provenance and data lineage enable compliance and governance, improved transparency, and accountability.

- It helps reduce the time needed for data preparation and model deployment, as it provides insight into the data used to develop models.

Continuous Integration/Continuous Delivery (CI/CD)

The concepts of DevOps apply directly to MLOps. In software development, developers generally do not edit the same copy of the code at the same time. Instead, each developer checks out the code they are going to work on from a shared code repository and merges it back once their task is complete. Before merging, the developer checks whether anything in the main version has changed and runs unit tests on their updates.
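The same pre-merge check applies to the data-processing code in an ML project. As a hedged illustration, the hypothetical `normalize_text` preprocessing step below comes with unit tests written with Python's built-in `unittest` module. A CI job triggered on each merge request could run them with `python -m unittest`, assuming the tests live in a file matching its default `test*.py` discovery pattern, and block the merge if any test fails.

```python
import unittest


def normalize_text(text: str) -> str:
    """Hypothetical preprocessing step: lowercase and collapse whitespace."""
    return " ".join(text.lower().split())


class TestNormalizeText(unittest.TestCase):
    def test_lowercases(self):
        self.assertEqual(normalize_text("Great Product"), "great product")

    def test_collapses_whitespace(self):
        self.assertEqual(normalize_text("  too   many\tspaces \n"), "too many spaces")

    def test_empty_string(self):
        self.assertEqual(normalize_text(""), "")


if __name__ == "__main__":
    # Run the suite without calling sys.exit, so this also works inside notebooks.
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestNormalizeText)
    unittest.TextTestRunner(verbosity=2).run(suite)
```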

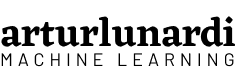

According to Park and Paul [1], Continuous Integration (CI) and Continuous Delivery (CD) are two very important concepts in software engineering. CI means integrating changes (new features, approved code commits, etc.) into your system reliably and continuously. CD means deploying these changes reliably and continuously. CI and CD can be performed in isolation or coupled together.

The more frequently developers merge into the main code, the lower the likelihood that versions diverge. This practice is known as Continuous Integration (CI) and can happen multiple times a day in a fast-paced development team.

Continuous Delivery (CD) involves building, testing, and releasing software in short cycles. This approach ensures that the main development code is always ready for production and can be deployed to the live environment at any moment. Without it, the main code is like a disassembled race car – fast but only after being put back together. Continuous Delivery can be performed either manually or automatically.

Continuous Training (CT)

In MLOps, continuous integration of source code, testing (unit and integration), and continuous delivery to production are crucial. But there is another important aspect to MLOps: data. ML models can deviate from their intended behavior when the data profile changes, unlike conventional software that consistently produces the same results. To address this, MLOps introduces the concept of Continuous Training (CT) in addition to continuous integration and continuous delivery. Continuous Training ensures that the ML model remains effective even as the data profile evolves.
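One common way to detect a changing data profile is to compare the distribution of a feature in live data with its distribution in the training data. The sketch below uses the Population Stability Index (PSI), the sum over bins of (a − e) · ln(a / e), where e and a are the training and live proportions. PSI is a technique chosen here for illustration; the text does not prescribe a drift metric. The 0.2 cutoff in the hypothetical `needs_retraining` helper is a widely used rule of thumb, not a standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a training sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values into edge bins
            counts[idx] += 1
        eps = 1e-6  # avoid log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(train_feature, live_feature, threshold: float = 0.2) -> bool:
    # Rule-of-thumb cutoff; tune it per feature and use case.
    return psi(train_feature, live_feature) > threshold


train = [i / 100 for i in range(1000)]   # stand-in for a training feature
live_same = list(train)
live_shifted = [x + 5 for x in train]    # simulated shift in the data profile
print(needs_retraining(train, live_same))     # False
print(needs_retraining(train, live_shifted))  # True
```

In a CT setup, a `True` result would trigger the training pipeline instead of just printing.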

Continuous training is the process of automatically monitoring, measuring, retraining, and serving models, and it is unique to MLOps. MLOps also differs from DevOps in other significant ways. In MLOps, continuous integration goes beyond testing and verifying code and components to include testing and verifying data, data schemas, and models. The focus also shifts from a single software package or service to a system, the ML training pipeline, which should automatically deploy another service: the model prediction service.
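Testing data schemas can be as simple as checking each incoming row against declared column types and allowed values before training starts. The sketch below shows the idea with a hypothetical schema for a sentiment dataset; dedicated tools (TFX, for example, includes schema and data validation components) do this at scale with inferred schemas.

```python
# Hypothetical schema: column -> (expected type, value check)
SCHEMA = {
    "text": (str, lambda v: len(v.strip()) > 0),   # non-empty review text
    "label": (int, lambda v: v in (0, 1)),         # binary sentiment label
}


def validate_rows(rows: list[dict], schema: dict) -> list[str]:
    """Return a list of schema violations; an empty list means the data passed."""
    errors = []
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        for col, (expected_type, check) in schema.items():
            if col not in row:
                continue
            value = row[col]
            if not isinstance(value, expected_type):
                errors.append(f"row {i}: {col} should be {expected_type.__name__}")
            elif not check(value):
                errors.append(f"row {i}: {col}={value!r} failed validation")
    return errors


good = [{"text": "great product", "label": 1}]
bad = [{"text": "", "label": 3}, {"label": 1}]
print(validate_rows(good, SCHEMA))  # []
for error in validate_rows(bad, SCHEMA):
    print(error)
```

In a CI pipeline, a non-empty error list would fail the build before any model is trained on the bad data.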

How to start implementing MLOps

Let’s discover how MLOps can help streamline the machine learning model lifecycle and optimize performance at each step.

During the discovery phase, the focus is on identifying potential use cases for machine learning and understanding the data and infrastructure requirements. During this stage, we explore datasets, look for features, and develop prototypes. MLOps can accelerate the development cycle here by automating feature engineering and experimentation.
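Automated experimentation can start as a loop that runs every combination in a search space and logs each result, instead of launching runs by hand. In the sketch below, `train_and_evaluate` is a hypothetical stand-in with a toy scoring function; in practice it would launch a real training job, and an experiment-management tool would store the log.

```python
import itertools


def train_and_evaluate(learning_rate: float, num_layers: int) -> float:
    """Hypothetical stand-in for a real training job; returns a validation score."""
    # Toy objective that peaks at learning_rate=0.01, num_layers=2.
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(num_layers - 2) * 0.05


search_space = {"learning_rate": [0.001, 0.01, 0.1], "num_layers": [1, 2, 3]}

experiments = []
for values in itertools.product(*search_space.values()):
    params = dict(zip(search_space.keys(), values))
    score = train_and_evaluate(**params)
    experiments.append({"params": params, "score": score})  # log every run, not just the best

best = max(experiments, key=lambda e: e["score"])
print(best)
```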

During the development phase, machine learning models are designed, trained, and evaluated. MLOps facilitates this process by providing a centralized repository for ML models and their artifacts and metadata. In addition, MLOps can help monitor and analyze model performance by tracking metrics and setting alerts for any discrepancies.
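A centralized model repository can be pictured as a registry that assigns version numbers and keeps each model's artifact location, metrics, and metadata together. The in-memory `ModelRegistry` below is a hypothetical sketch of that idea; managed services such as Vertex AI or SageMaker provide persistent registries with far more features.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str            # where the trained model files are stored
    metrics: dict[str, float]    # evaluation metrics logged at training time
    metadata: dict[str, str] = field(default_factory=dict)


class ModelRegistry:
    """In-memory stand-in for a centralized model repository."""

    def __init__(self):
        self._models: dict[str, list[ModelVersion]] = {}

    def register(self, name: str, artifact_uri: str, metrics: dict[str, float],
                 **metadata: str) -> ModelVersion:
        versions = self._models.setdefault(name, [])
        mv = ModelVersion(len(versions) + 1, artifact_uri, metrics, metadata)
        versions.append(mv)
        return mv

    def latest(self, name: str) -> ModelVersion:
        return self._models[name][-1]

    def best(self, name: str, metric: str) -> ModelVersion:
        return max(self._models[name], key=lambda mv: mv.metrics[metric])


registry = ModelRegistry()
registry.register("sentiment-clf", "models/sentiment-clf/1", {"accuracy": 0.87},
                  trained_by="ci-pipeline")
registry.register("sentiment-clf", "models/sentiment-clf/2", {"accuracy": 0.91})
registry.register("sentiment-clf", "models/sentiment-clf/3", {"accuracy": 0.89})
print(registry.latest("sentiment-clf").version)                 # 3
print(registry.best("sentiment-clf", "accuracy").artifact_uri)  # models/sentiment-clf/2
```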

During the deployment phase, machine learning models are deployed into production and monitored for performance and accuracy. MLOps can help automate this process by orchestrating data pipelines and deploying models and services, while ensuring that the system is reliable and that models meet defined criteria, for example deploying a new model only if its accuracy is higher than that of the current one. MLOps also helps maintain and scale these services in production.
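The example criterion above, deploying a new model only if it beats the current one, can be written as a small gate function. The sketch below adds an absolute quality floor as an extra, assumed criterion; the function name and parameters are hypothetical. Pipeline frameworks offer this as a built-in step (in TFX, the Evaluator component can "bless" a model against thresholds).

```python
from typing import Optional


def should_deploy(candidate: dict, production: Optional[dict],
                  metric: str = "accuracy", floor: float = 0.0) -> bool:
    """Return True only if the candidate model passes the deployment gate."""
    # Assumed criterion: the candidate must clear an absolute quality floor.
    if candidate[metric] < floor:
        return False
    # First deployment: there is no production model to compare against.
    if production is None:
        return True
    # Criterion from the text: strictly better than the model in production.
    return candidate[metric] > production[metric]


print(should_deploy({"accuracy": 0.91}, {"accuracy": 0.89}))         # True
print(should_deploy({"accuracy": 0.88}, {"accuracy": 0.89}))         # False
print(should_deploy({"accuracy": 0.60}, None, floor=0.70))           # False
```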

Tools

In terms of tools, there are several MLOps tools that you can use, including:

- Git and GitHub for version control, and Jenkins, GitHub Actions and Cloud Functions for CI/CD

- Docker for containerization and Kubernetes for orchestration

- TFX, Kubeflow, Apache Airflow and Cloud Composer for pipeline management

- Cloud services such as Google Cloud AI Platform, Google Cloud Vertex AI, AWS SageMaker or Microsoft Azure Machine Learning for machine learning infrastructure

- Data science and machine learning tools such as TensorFlow, PyTorch, Scikit-learn, XGBoost for model development

- Monitoring tools such as TensorBoard, Weights & Biases and Neptune AI for model performance monitoring and experiment management

In "How I deployed my first machine learning model", I detailed the process of building an ML pipeline using:

- TFX for pipeline management

- Neptune AI for experiment management

- Docker for containerization

- Vertex AI for pipeline deployment

- AI Platform for model training and serving

- Cloud Functions and GitHub Actions for version control and CI/CD

Hands-on Tutorials

- CI/CD for TFX Pipelines with Vertex and AI Platform: develop a CI/CD pipeline for TFX Pipelines on Google Cloud Vertex AI and monitor your model’s serving requests.

- Sentiment Analysis with TFX Pipelines — Local Deploy: build a local pipeline to deploy a sentiment analysis model with TFX Pipelines.

- Basic Kubeflow Pipeline From Scratch: create a Kubernetes cluster, containerize your code with Docker, and create a Kubeflow pipeline.

References

[1] Park, C.; Paul, S. Model training as a CI/CD system: Part I (6 October 2021), Google.

[2] Di Fante, A. L. CI/CD for TFX Pipelines with Vertex and AI Platform (18 October 2022), arturlunardi.

[3] Di Fante, A. L. Sentiment Analysis with TFX Pipelines — Local Deploy (18 October 2022), arturlunardi.

[4] Villatte, A. L. Basic Kubeflow Pipeline From Scratch (26 January 2022), Medium.

[5] Google Cloud Training. MLOps (Machine Learning Operations) Fundamentals [MOOC]. Coursera.

[6] MLOps: Continuous delivery and automation pipelines in machine learning (7 January 2020), Google.